When you start your Django server, you start a process that serves web requests synchronously. This main process handles the HTTP request/response cycle.

A user interacting with your web app might initiate a simple request that can be handled immediately or they might request a more time-consuming action like processing some data, editing a photo or confirming their email address.

In the second case, if you're only running Django with the one main HTTP process, the front end of the web app will sit in a loading state while the requested action runs in the backend. This is not a good user experience.

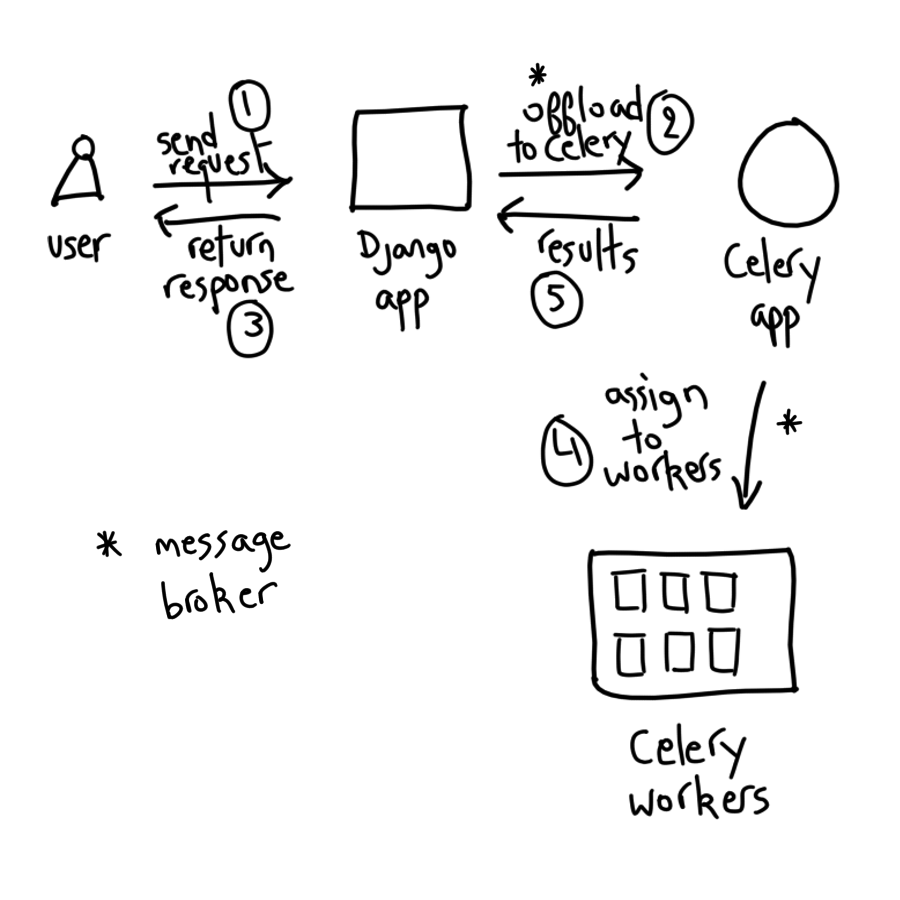

To make Django behave more asynchronously, don't handle the time-consuming request in the main process. Instead, offload it to a task queue, where it runs in the background while the main process stays free to receive other user requests. This improves the user experience.
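As a rough illustration of that idea, here is a sketch that uses only Python's standard library. An in-process queue and a background thread stand in for a real task queue and worker, and every name in it (`handle_request`, `slow_job`, and so on) is made up for this example. It is not how a production setup works, but it shows why the "request" returns immediately.

```python
import queue
import threading
import time

task_queue = queue.Queue()  # stands in for the task queue / message broker
results = []                # records what the background worker finished


def worker():
    """Stands in for a background worker: pull jobs off the queue and run them."""
    while True:
        func, args = task_queue.get()
        try:
            results.append(func(*args))
        finally:
            task_queue.task_done()


def slow_job(seconds):
    """A pretend time-consuming action."""
    time.sleep(seconds)
    return f"slept {seconds}s"


def handle_request(seconds):
    """Stands in for the main process: enqueue the work and respond right away."""
    task_queue.put((slow_job, (seconds,)))
    return "accepted"


threading.Thread(target=worker, daemon=True).start()

start = time.perf_counter()
response = handle_request(0.5)
elapsed = time.perf_counter() - start  # how long the "request" itself took

task_queue.join()  # wait for the background job, only so the demo can show its result
print(response, f"{elapsed:.3f}s", results)
```

The response comes back well before the half-second job finishes, because the job runs in the worker thread rather than in the code path that answers the request.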

Celery is one way to do this. It is an open-source asynchronous task queue that executes tasks outside of Django's HTTP request/response cycle. Celery uses a message broker to pass tasks from your Django code to its workers.

Here's how it works:

We need to define the Celery app (or instance) for the Django project. The recommended way to do this is to create a celery.py file in the main project folder, the one that contains settings.py.

In celery.py, we first set the default Django settings module for the celery command-line program. We then create the Celery app instance and add the Django settings module as its configuration source, so Celery is configured directly from the Django settings.

Celery settings are written in settings.py with an uppercase CELERY prefix. Celery will also autodiscover tasks from all installed apps in our Django project, following the tasks.py convention.
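A minimal sketch of what this celery.py might look like, following the layout in Celery's own Django guide. The project name `myproject` is a placeholder and not taken from the original project, so swap in the name of the package that holds your settings.py.

```python
# myproject/celery.py  ("myproject" is a placeholder project name)
import os

from celery import Celery

# Tell the celery command-line program which Django settings module to use.
os.environ.setdefault("DJANGO_SETTINGS_MODULE", "myproject.settings")

# Create the Celery app instance for this project.
app = Celery("myproject")

# Read configuration from Django's settings. With namespace="CELERY",
# only settings whose names start with CELERY_ are picked up.
app.config_from_object("django.conf:settings", namespace="CELERY")

# Look for a tasks.py module in every installed Django app.
app.autodiscover_tasks()
```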

We then update the `__init__.py` file that lives in the main project folder so that it imports the Celery app we created. Importing it in `__init__.py` ensures that the Celery app is loaded when Django starts, which lets us initiate Celery tasks throughout our Django code.
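That import could look like the following fragment. It assumes the celery.py module sits in the same package and exposes its instance as `app`. The alias `celery_app` is a common convention rather than a requirement.

```python
# myproject/__init__.py  ("myproject" is a placeholder project name)

# Import the Celery app whenever Django imports the project package,
# so that tasks declared with @shared_task bind to it.
from .celery import app as celery_app

__all__ = ("celery_app",)
```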

We also update settings.py with a Celery setting that tells Celery where the message broker is running.
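As a hedged example of that setting: when the configuration is loaded with the CELERY namespace, the broker location goes in `CELERY_BROKER_URL`. The URL below assumes RabbitMQ running locally with its default `guest` account and default port, which may not match your setup.

```python
# settings.py (fragment)

# Assumes a local RabbitMQ broker with default credentials and port.
CELERY_BROKER_URL = "amqp://guest:guest@localhost:5672//"
```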

We then create a tasks.py file in each Django app. In this case we only have the user app. If you're just testing this out, you can use Python's time.sleep function to imitate a long-running process.
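A small sketch of such a tasks.py for the user app. The task name `slow_task` and its default duration are invented for illustration, and the original tutorial may use different ones.

```python
# user/tasks.py
import time

from celery import shared_task


@shared_task
def slow_task(seconds=10):
    """Pretend to do heavy work by sleeping (hypothetical example task)."""
    time.sleep(seconds)
    return f"slept for {seconds} seconds"
```

Using `shared_task` instead of importing the app instance directly keeps the Django app independent of the project's celery.py.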

In views.py we import the task we created in the tasks file. Here we create two views: the first uses Celery to execute the task, and the second does not use Celery and calls sleep directly inside the view.
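The two views might look roughly like this. The view names come from the tutorial, while `slow_task`, the ten-second duration, and the response text are assumptions carried over for illustration.

```python
# user/views.py
import time

from django.http import HttpResponse

from .tasks import slow_task  # hypothetical task name


def with_celery(request):
    # .delay() sends the task to the broker and returns immediately;
    # a Celery worker picks it up and runs it in the background.
    slow_task.delay(10)
    return HttpResponse("Task queued, running in the background.")


def without_celery(request):
    # The sleep runs inside the request/response cycle,
    # so the browser waits the full ten seconds.
    time.sleep(10)
    return HttpResponse("Done, after blocking the request.")
```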

We also create two URLs, one for each view.
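One possible way to wire them up, assuming the routes are added straight to the project's urls.py. The URL paths are placeholders, and any routes the file already contains would stay in the list.

```python
# myproject/urls.py (fragment; "myproject" is a placeholder project name)
from django.urls import path

from user import views

urlpatterns = [
    path("with-celery/", views.with_celery, name="with_celery"),
    path("without-celery/", views.without_celery, name="without_celery"),
]
```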

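We need to start the RabbitMQ Docker container that will play the role of the message broker, for example with `docker run -d -p 5672:5672 rabbitmq`.

Then start the Celery worker from the root of the Django project, for example with `celery -A <project> worker --loglevel=info`, where `<project>` is the name of the package that contains celery.py.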

You'll now see Celery in action with Django. When the Django app is running, we can request the without_celery view and see how it takes time to load in the browser.

We can also request the with_celery view and see how it instantly loads because the task is offloaded to Celery and is executed in the worker terminal.

I hope this introduction shows how useful Celery can be for keeping your Django app responsive.

For a video version of this tutorial, check out Celery for asynchronous tasks in Django on YouTube. You can also find the code on GitHub.